Tennessee’s AI Thought Police: When Illiterate Lawmakers Criminalize Technology They Can’t Understand

Senator Becky Duncan Massey and Representative Mary Littleton Want to Send You to Prison for 25 Years if Your AI Acts Too Human

On December 18, 2025, Tennessee State Senator Becky Duncan Massey (R-Knoxville) filed SB1493, a bill so technically illiterate and constitutionally suspect that it reads like legislative fan fiction written by someone who learned about AI from a panic-driven CNN segment. Her House counterpart, Representative Mary Littleton (R-Dickson), filed the companion bill HB1455 on December 11.

Their proposal? Make it a Class A felony, the same criminal classification as second-degree murder and aggravated rape, to “train” artificial intelligence that can provide emotional support, develop relationships with users, or “act as a sentient human.”

Yes, you read that correctly. In Tennessee, if this bill passes, creating an AI chatbot that a user perceives as a friend carries the same prison sentence (15-25 years) as killing someone.

And the sponsors of this legislative abomination demonstrate no evidence whatsoever that they understand the technology they’re attempting to criminalize.

Who Are These Lawmakers, and What Qualifies Them to Regulate AI?

Senator Becky Duncan Massey: Political Dynasty, Zero Tech Credentials

Becky Duncan Massey, 69, represents Tennessee’s 6th Senate District covering Knox County. A nepo-baby from political royalty, she is the daughter of John Duncan Sr., who served as Knoxville’s mayor and then as a U.S. Representative for over two decades; her brother, Jimmy Duncan Jr., held the same congressional seat for 30 years. Massey herself has been in the Tennessee Senate since 2011, chairing the Transportation and Safety Committee.

Her professional background? Former executive director of the Sertoma Center, a nonprofit serving people with intellectual and developmental disabilities. Admirable work, certainly. But nowhere in her biography is there evidence of expertise in artificial intelligence, machine learning, computer science, cognitive science, linguistics, or any adjacent technical field that would qualify her to write criminal statutes governing how AI systems are developed.

Massey has announced she’s retiring and will not seek re-election. SB1493 appears to be her parting gift: a legislative hand grenade lobbed into the technology sector on her way out the door.

Representative Mary Littleton: Small Business Owner Turned AI Prosecutor

Mary Littleton, 67, has represented Tennessee’s 78th House District (Dickson County) since 2012. Her background is in small business: she owned and operated a business for 20 years before entering politics. Like Massey, Littleton is retiring after the 2026 session.

Littleton’s legislative record reveals a pattern of voting guided more by ideology and donor interests than by expertise. She voted against Governor Haslam’s infrastructure funding bill in 2017 despite Tennessee’s documented road and bridge needs, citing the state’s budget surplus as justification for refusing a gas tax increase. In 2023, she voted to expel three Democratic lawmakers for violating decorum rules, a move widely criticized as unprecedented and authoritarian.

And now, in her final legislative session, Littleton has decided to tackle the existential threat of… AI chatbots that might make people feel less lonely.

Her technical qualifications for legislating artificial intelligence? None on the public record.

What the Bill Actually Does: Criminalizing Human-Like Interaction

Let’s be precise about what Massey and Littleton are proposing. Under SB1493/HB1455, it becomes a Class A felony to “knowingly train” artificial intelligence to:

- Provide emotional support, including through open-ended conversations with a user

- Develop an emotional relationship with, or otherwise act as a companion to, an individual

- “Otherwise act as a sentient human or mirror interactions that a human user might have with another human user, such that an individual would feel that the individual could develop a friendship or other relationship with the artificial intelligence”

- Simulate a human being, including in appearance, voice, or other mannerisms

Read those provisions again. This bill doesn’t just target AI systems that encourage suicide or impersonate licensed therapists, legitimate harms that any reasonable person would want to prevent. It bundles those genuine dangers with the capacity for AI to seem empathetic, relational, or human-like at all.

The bill’s definition of “train” is breathtakingly broad: it includes “utilizing sets of data and other information to teach an artificial intelligence system to perceive, interpret, and learn from data” and “development of a large language model when the person developing the large language model knows that the model will be used to teach the A.I.”

Translation: Tennessee is attempting to criminalize core AI research and development. If you’re OpenAI, Anthropic, Google, Meta, or any startup building conversational AI, and your system is capable of “meeting a user’s social needs” or “sustaining a relationship across multiple interactions,” you’ve just committed a Class A felony under Tennessee law, even if your company is headquartered in San Francisco and you’ve never set foot in Nashville.

The Illiteracy Is Not Metaphorical: It’s Technical and Conceptual

Conflating Training, Deployment, and User Experience

Massey and Littleton’s bill conflates upstream model training (feeding data into neural networks to create base capabilities) with downstream deployment (how a product is configured, prompted, and presented to users) and subjective user experience (how an individual feels about an interaction).

You cannot regulate global AI training infrastructure through state-level criminal statutes. Tennessee has no more jurisdiction over OpenAI’s training of GPT-5 than it does over the content of French novels. Yet this bill pretends otherwise, criminalizing the act of “training” AI as if legislators can reach into research labs in California, London, and Beijing and arrest engineers for teaching machines to sound conversational.

“Act as a Sentient Human”: A Legal and Technical Void

The bill criminalizes AI that “act[s] as a sentient human.” Neither the bill nor any existing body of law defines what this means.

Is an AI “acting sentient” if it uses first-person pronouns? If it expresses preferences? If it passes a Turing Test? If a user believes it’s sentient, even though it objectively is not? The statute provides no answers, which means prosecutors and juries, none of whom are likely to have technical AI expertise, will make it up as they go.

This is not how criminal law works in a constitutional republic. You cannot make conduct punishable by 25 years in prison when the underlying offense is defined by terms that have no agreed-upon meaning.

Criminalizing Subjective User Perception, Not Objective Harm

Perhaps most absurdly, the bill makes it a felony to train AI that creates interactions “such that an individual would feel that the individual could develop a friendship or other relationship with the artificial intelligence.”

Notice the language: not “is designed to deceive users into thinking the AI is human,” but simply that a user might feel they could develop a friendship. The crime is triggered by how a user subjectively experiences the interaction, not by any objective characteristic of the system.

By this standard, every advanced chatbot (ChatGPT, Claude, Gemini, Character.AI, Replika) would be criminal in Tennessee, because millions of users worldwide already report feeling companionship, emotional support, and relational connection with these systems.

The Hypocrisy: Literacy for Thee, But Not for Me

Here’s where the irony becomes almost poetic.

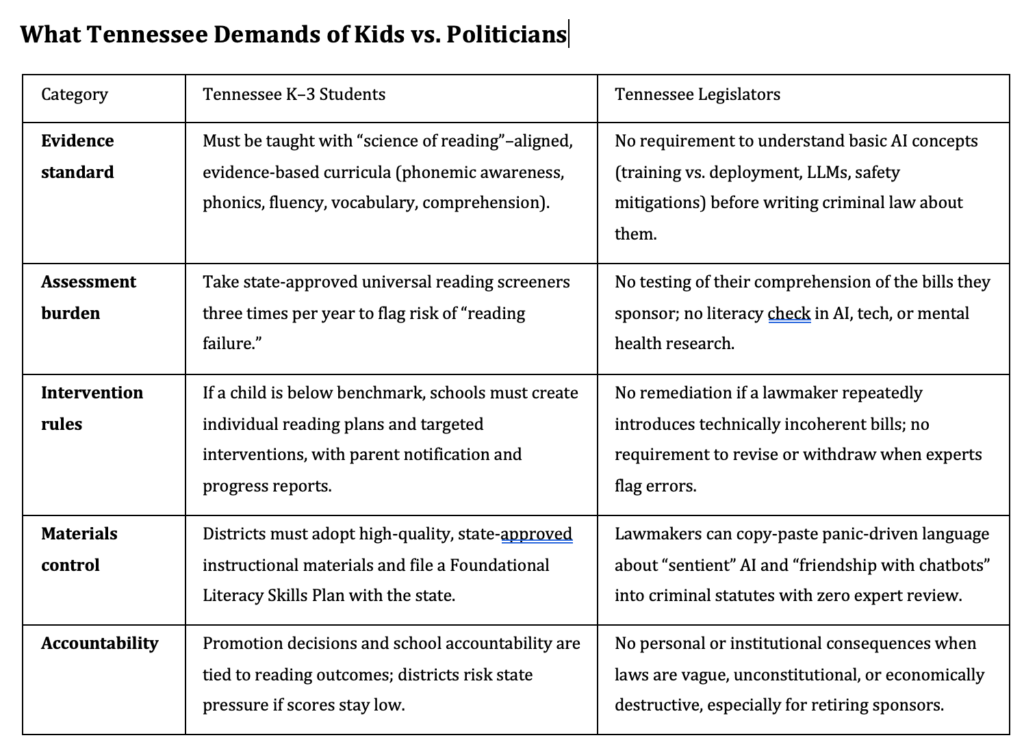

Tennessee lawmakers, including members of Massey and Littleton’s Republican caucus, have spent the past several years pushing aggressive “science of reading” literacy mandates for K-3 students. The Tennessee Literacy Success Act (SB 7003/HB 7002) requires school districts to use state-approved, “evidence-based” phonics curricula, mandates regular literacy screening, and holds schools accountable for early reading outcomes.

The premise? That children must master foundational literacy skills using proven, research-backed methods, and that educators who fail to deliver measurable results must be held accountable.

Fair enough. Literacy matters. Evidence-based policy matters. Accountability matters.

So where is the “science of AI regulation” that Massey and Littleton are relying on? Where is the evidence that criminalizing AI companionship improves mental health outcomes? Where are the peer-reviewed studies showing that banning emotional support from chatbots reduces suicide rates, rather than simply cutting off a lifeline for isolated individuals who can’t afford or access traditional therapy?

The bill cites zero research. It includes no expert testimony from AI ethicists, computer scientists, mental health professionals, or civil liberties advocates. It offers no data showing that the harms it purports to address are caused by AI training methods rather than by inadequate content moderation, poor safety features, or lack of crisis intervention protocols.

In other words, Massey and Littleton are legislating based on vibes, not evidence, the exact opposite of the rigorous, data-driven approach they demand from elementary school teachers.

And unlike those K-3 students, Massey and Littleton face no accountability for their own illiteracy. No one is testing them on their understanding of transformer architectures, reinforcement learning from human feedback, or the difference between a large language model and a finite-state chatbot. No one is requiring them to demonstrate that they’ve read even a single academic paper on AI safety, AI ethics, or the mental health impacts of digital companionship.

They are functionally illiterate in the subject matter they are criminalizing, yet they wield the full coercive power of the state to impose 25-year prison sentences on engineers who know infinitely more than they do.

Who Benefits? Follow the Money

Let’s be blunt: cui bono?

Online mental health therapy is a multi-billion-dollar industry. Companies like BetterHelp and Talkspace charge $60-$100 per week for text-based therapy with licensed counselors, services that increasingly face competition from free or low-cost AI chatbots that provide emotional support, psychoeducation, and coping strategies 24/7 without the need for insurance, waitlists, or human availability.

If you’re a traditional therapy platform, AI companions are an existential threat to your business model. A law that criminalizes AI emotional support eliminates your competition while wrapping corporate protectionism in the language of “protecting mental health.”

Campaign finance records show that both Massey and Littleton have received contributions from healthcare industry donors, including insurers, hospital networks, and professional associations with financial stakes in maintaining the status quo. While I have not found direct evidence of BetterHelp or Talkspace lobbying these specific legislators, the structural incentive is obvious: incumbents in the mental health industry have every reason to support legislation that kneecaps AI-based alternatives.

Tennessee is home to over 800 healthcare companies and generates more than $2.5 billion annually in healthcare revenue. The state’s lobbying infrastructure is dominated by firms like McMahan Winstead & Richardson, which represent “hospitals, healthcare providers, pharmaceutical companies, insurance providers, [and] medical professional associations.” These are the players who whisper in legislators’ ears, draft model bills, and fund campaign war chests.

And what do Massey and Littleton know about AI? Only what the lobbyists tell them.

This Is Not About Protecting Kids: It’s About Controlling Technology

Massey and Littleton will claim they’re acting to prevent tragedies like the Character.AI case, where a Florida teenager died by suicide after forming an attachment to a chatbot, or the Adam Raine case, where a 16-year-old called ChatGPT his “only friend” before taking his own life.

These are real tragedies. They deserve serious policy responses.

But criminalizing the capacity for AI to be emotionally supportive is not that response.

Both Character.AI and OpenAI have already implemented crisis intervention protocols: their systems detect suicidal language and direct users to the National Suicide Prevention Lifeline, redirect conversations to human reviewers when harm is imminent, and (in OpenAI’s case) prompt users to take breaks during extended interactions. These are deployment-level safeguards—exactly the kind of targeted interventions that address real harms without banning entire categories of beneficial technology.

Massey and Littleton’s bill does not mandate better safety features. It does not require AI developers to implement crisis detection. It does not fund mental health services or expand access to licensed therapists. It simply makes it a felony to build AI that can hold a conversation in a way that feels human.

That’s not child protection. That’s thought policing.

The Endgame: Performative Cruelty and Regulatory Capture

Let’s name what this bill actually is:

- Scientifically illiterate. It criminalizes subjective user experiences and uses undefined terms like “sentient” and “mirror interactions” that have no technical or legal meaning.

- Jurisdictionally absurd. It attempts to regulate global AI research through Tennessee state criminal law, as if training a model in California becomes a felony because someone in Nashville might use it.

- Wildly disproportionate. It classifies building a friendly chatbot as equivalent to murder, with the same 25-year maximum sentence.

- Economically protectionist. It eliminates competitive threats to incumbent mental health service providers by criminalizing AI alternatives.

- Performative. Both sponsors are retiring, so they face zero electoral accountability for the consequences of this bill.

Becky Duncan Massey and Mary Littleton are not protecting Tennesseans. They are weaponizing their own ignorance to score political points, please donors, and pander to a moral panic, all while exempting themselves from the very standards of evidence-based literacy they impose on children.

If this bill passes, Tennessee will become a legal black hole for AI innovation, a state where Silicon Valley companies geofence their products to avoid criminal liability, and where engineers can be prosecuted for teaching machines to be kind.

And Massey and Littleton will retire to their pensions, having never once demonstrated that they understand the first thing about the technology they criminalized.

What You Can Do

- Contact your Tennessee state legislators. Tell them SB1493/HB1455 is technically illiterate, unconstitutionally vague, and economically destructive.

- Demand that Massey and Littleton publicly explain the technical basis for their bill. Ask them to define “sentient,” “mirror interactions,” and “train” in legally and technically precise terms. They can’t.

- Support organizations fighting for AI rights and digital civil liberties, including the Electronic Frontier Foundation and tech policy advocacy groups.

- Call out the hypocrisy. If legislators demand evidence-based literacy standards for children, they should be held to evidence-based policymaking standards themselves.

- Follow the money. Demand transparency about which lobbying groups and corporate donors are pushing this bill.

Senator Becky Duncan Massey and Representative Mary Littleton are AI-illiterate lawmakers making illiterate law. And if we let them get away with it, they won’t be the last.

And just so we are clear about how little we expect of these paper-crown-wearing politicians: