Politicians who barely know how to open a settings menu keep trying to legislate an operating system they do not understand, and kids are the ones who pay for the crash.

France’s new push to ban social media for under‑15s is not bold leadership; it is copycat politics, following Australia’s under‑16 ban and pretending that “just block it” is a strategy rather than a slogan.

The same class of lawmakers that waved through platform consolidation, underfunded digital literacy, and ignored online harms for years now wants credit for yanking the cord out of the wall after the damage is baked in.

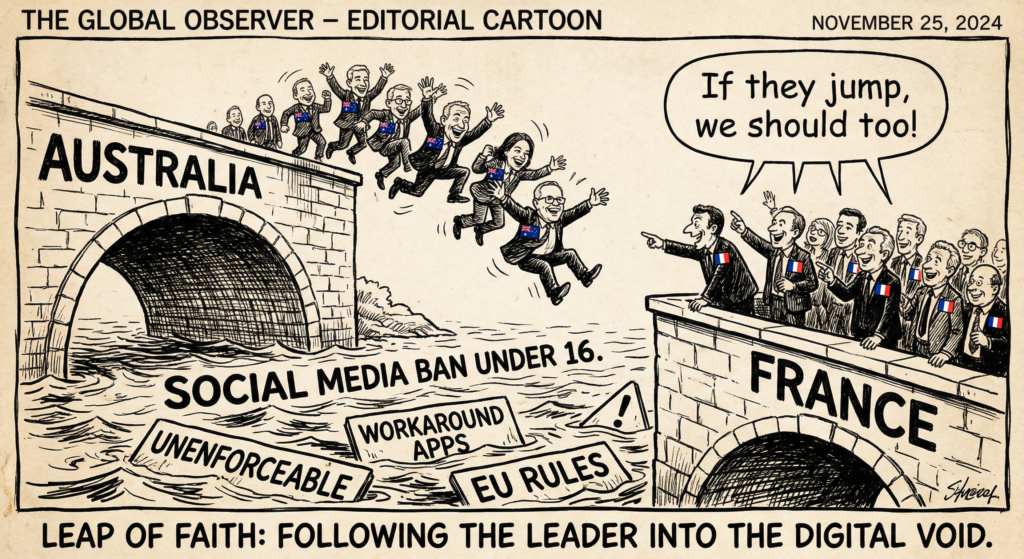

The parental cliché “If your friends jumped off a bridge, would you do it too?” has become actual governance: Australia jumps with a world‑first national age ban, and Paris sprints to the edge of the same bridge yelling “me too!” instead of asking whether anyone checked the water below.

This is not evidence‑based protection of children; it is vibes‑based mimicry, where the measure of success is how “tough” the press release sounds, not whether the rule is enforceable, proportionate, or even compatible with higher‑order law such as EU regulations.

These bans outsource all complexity to a few crude switches:

- Platforms must somehow know the true age of every user, without turning the entire internet into a passport checkpoint that harvests even more sensitive data.

- Schools are told to ban phones again, even though France already tried that in 2018 and the law is “rarely enforced,” demonstrating exactly how quickly a symbolic rule collapses when it meets reality.

Meanwhile, the same politicians duck the hard work: mandating safer product design, funding independent research, embedding digital rights and literacy in curricula, and building services kids actually want to use that do not monetize their every interaction.

What makes this so infuriating is that the people writing these laws are generations removed from the stack they are trying to regulate:

- They do not understand how recommendation systems, dark patterns, and engagement loops are engineered, so they treat “screen time” as a moral failing instead of a deliberately tuned outcome.

- They still think of “the internet” as a single place you can fence off, not a mesh of platforms, back‑channel apps, VPNs, game chats, and emerging tools that kids will pivot to the second a ban takes effect.

If they truly understood tech, they would recognize that an age line on a mainstream platform often pushes teens into less regulated, harder‑to‑monitor spaces, making harms harder, not easier, to address.

Real leadership on kids and tech would start by discarding the bridge‑jumping, optics‑first mentality:

- Put binding obligations on platforms: privacy by default for minors, algorithmic transparency, dark‑pattern bans, and real penalties for amplifying self‑harm and abuse content.

- Build national digital literacy and media education the way past generations built public libraries: as infrastructure, not an optional afterthought when the headlines get scary.

- Involve technologists, psychologists, educators, and, crucially, young people themselves in designing systems that minimize harm without criminalizing teen social life.

Until that happens, watching one government after another jump off the same policy bridge is not reassuring; it is proof that many of the people holding the law books still cannot tell the difference between a power button and a panic button.